SCC

Brasil

the cloud gurus

Software Cloud Consulting

Your software development, cloud, consulting & shoring company

Cost optimization strategies

By Wolfgang Unger

How can I optimize costs on AWS?

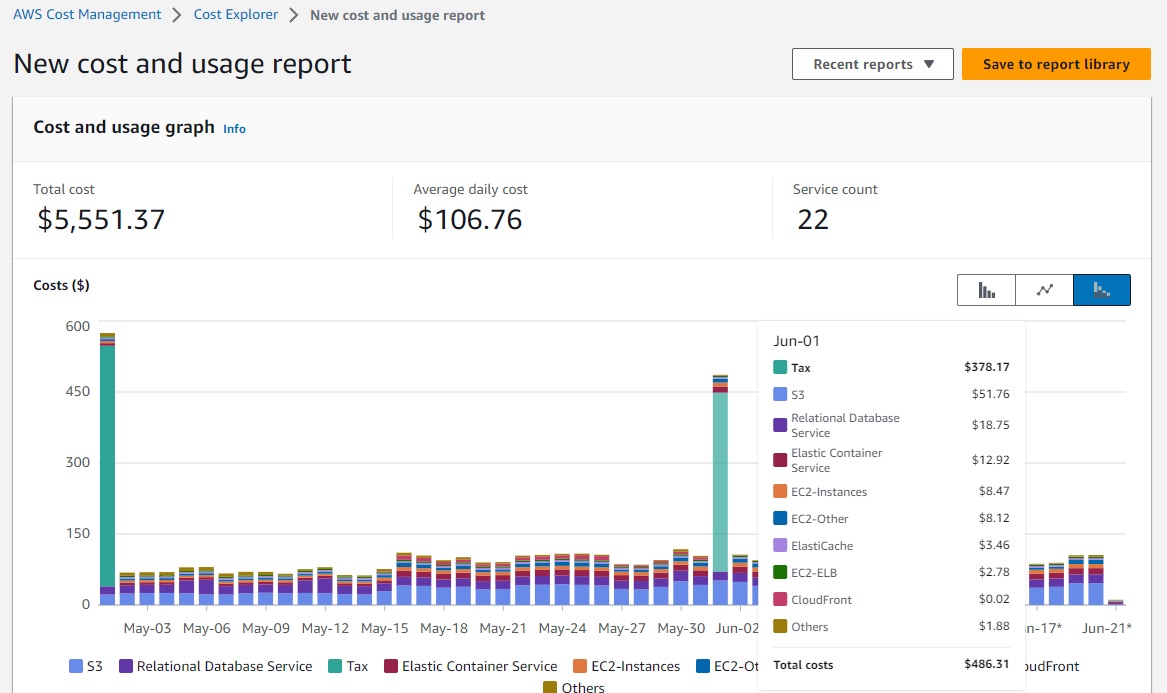

Before we look at generic ways to save costs on AWS, you should take a closer look at your own costs, since every customer runs different services, and get an overview of which services are the most expensive in your account(s).

Initial Cost Analysis

You can use the AWS Cost Explorer to analyze usage and costs. There are a lot of filters to get a good overview of your expenses.

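You can group costs at a high level, by account or by AWS service, but also filter down to the resource level, for example by EC2 instance ID (resource-level data has to be enabled in the Cost Explorer preferences first).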

Try to figure out which resources or resource groups are the most expensive; this is where you should consider changes.

If you spend $4 a month on Lambda functions, it would be a waste of time to develop, or even consider, measures to lower these costs.

After your analysis you should know, for example:

- EC2 compute costs: $600
- Data transfer costs: $120
- Storage costs: $400
- etc.
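To get such a breakdown programmatically, the Cost Explorer API can group costs by service. The following is a minimal Python sketch, assuming boto3 is installed and credentials with Cost Explorer access are configured; the function names are hypothetical, and pagination via NextPageToken is ignored for brevity.

```python
from collections import defaultdict


def rank_services(response: dict) -> list[tuple[str, float]]:
    """Sum UnblendedCost per service over all periods of a
    get_cost_and_usage response, most expensive service first."""
    totals: dict[str, float] = defaultdict(float)
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            totals[service] += float(group["Metrics"]["UnblendedCost"]["Amount"])
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def fetch_costs_by_service(start: str, end: str) -> dict:
    """Monthly costs grouped by service; start/end are ISO dates, end is exclusive.

    Simplification: a NextPageToken in the response is not followed.
    """
    import boto3  # imported here so rank_services works without boto3

    ce = boto3.client("ce")
    return ce.get_cost_and_usage(
        TimePeriod={"Start": start, "End": end},
        Granularity="MONTHLY",
        Metrics=["UnblendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
    )
```

For example, `rank_services(fetch_costs_by_service("2024-05-01", "2024-06-01"))` would list the services of May 2024 sorted by cost. Keeping the ranking separate from the API call makes it easy to test against a saved response, and it avoids extra calls, since Cost Explorer API requests are billed per request.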

Now we can take a closer look at the possibilities to save costs on AWS.

Compute

Rightsizing

Use Trusted Advisor to find low-utilization EC2 instances or idle RDS instances. Also use the CloudWatch metrics to check whether your instances are oversized.

On-premises administrators tend to keep CPU utilization on their servers low to extend their lifespan, since the servers are company property.

The average utilization there is about 30%. You don't need to follow this approach with cloud instances: if an instance still performs well at 90% CPU utilization, there is no need to upsize. Keep your instances under load; you pay for 100% of the instance, not just for the 30% you use.
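The CloudWatch check can be scripted. A minimal sketch in Python, assuming boto3 and an EC2 instance with the standard CPUUtilization metric: it pulls two weeks of hourly statistics and flags the instance if even the hourly peaks stay below a threshold. The 40% threshold and the function names are illustrative assumptions, not AWS recommendations.

```python
from datetime import datetime, timedelta, timezone


def peak_cpu(datapoints: list[dict]) -> float:
    """Highest hourly 'Maximum' value in a CloudWatch Datapoints list."""
    return max((point["Maximum"] for point in datapoints), default=0.0)


def looks_oversized(datapoints: list[dict], threshold_percent: float = 40.0) -> bool:
    """True if there is data and even the hourly CPU peaks stayed below the threshold.

    The 40% default is an illustrative assumption, not an AWS recommendation.
    """
    return bool(datapoints) and peak_cpu(datapoints) < threshold_percent


def fetch_cpu_datapoints(instance_id: str, days: int = 14) -> list[dict]:
    """Hourly CPUUtilization statistics of one EC2 instance."""
    import boto3  # imported lazily so the helpers above work offline

    end = datetime.now(timezone.utc)
    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/EC2",
        MetricName="CPUUtilization",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        StartTime=end - timedelta(days=days),
        EndTime=end,
        Period=3600,  # 14 days x 24 h = 336 datapoints, below the 1,440 per-call limit
        Statistics=["Average", "Maximum"],
    )
    return response["Datapoints"]
```

An instance that never exceeds the threshold even at its hourly peak is a candidate for a smaller size; one that runs at 90% and still performs well, as described above, is not.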

Autoscaling

Use horizontal scaling, not vertical scaling. On premises you cannot just launch new servers when it is Black Friday.

You have to estimate your usage at peak times and do the guesswork when buying servers for your racks.

So these servers are normally oversized and run at low utilization, so that they can still cope with the peak times.

On AWS you should find the instance size that runs your application without problems but at high utilization.

If you need more compute power, Auto Scaling can launch new instances based on metrics such as CPU utilization, network traffic or, for example, the number of SQS messages.

You scale out when more servers are needed and scale in when there are only a few users on the platform.

The important numbers to fine-tune here are the desired count, the minimum count and the maximum count.
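As an illustration, the three values and a CPU-based scaling policy could be set with boto3 like this. This is a sketch under assumptions: the group name, the 2/2/10 capacity values and the 70% target are hypothetical examples, and a target tracking policy is just one of the scaling policy types Auto Scaling offers.

```python
def capacity_settings(group_name: str, minimum: int, desired: int, maximum: int) -> dict:
    """Keyword arguments for update_auto_scaling_group."""
    if not 0 <= minimum <= desired <= maximum:
        raise ValueError("expected 0 <= minimum <= desired <= maximum")
    return {
        "AutoScalingGroupName": group_name,
        "MinSize": minimum,
        "DesiredCapacity": desired,
        "MaxSize": maximum,
    }


def cpu_target_tracking_policy(group_name: str, target_cpu: float = 70.0) -> dict:
    """Keyword arguments for put_scaling_policy: keep average CPU near target_cpu."""
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": f"{group_name}-cpu-target",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            "TargetValue": target_cpu,
        },
    }


def configure_group(group_name: str = "web-asg") -> None:  # hypothetical group name
    import boto3  # imported lazily so the builders above can be used offline

    autoscaling = boto3.client("autoscaling")
    autoscaling.update_auto_scaling_group(**capacity_settings(group_name, 2, 2, 10))
    autoscaling.put_scaling_policy(**cpu_target_tracking_policy(group_name))
```

A fairly high target such as 70% follows the idea above: the instances run at high utilization, and Auto Scaling adds capacity only when the group average goes beyond it.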

Reduce usage hours

On premises nobody shuts down servers. The only saving would be on the electricity bill, since you have already paid for the servers. In the cloud you pay per usage.

This means that if you use an instance just 8 hours a day, you can save about 66% of its cost by running it for those 8 hours instead of 24.
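The percentage is simple arithmetic, sketched below in Python. It counts instance hours only: EBS volumes attached to a stopped instance are still billed. The function names are illustrative.

```python
HOURS_PER_DAY = 24
HOURS_PER_WEEK = 7 * HOURS_PER_DAY


def daily_savings(hours_running_per_day: float) -> float:
    """Share of instance-hour costs saved by running only part of each day."""
    if not 0 <= hours_running_per_day <= HOURS_PER_DAY:
        raise ValueError("hours must be between 0 and 24")
    return 1 - hours_running_per_day / HOURS_PER_DAY


def weekly_savings(hours_per_day: float, days_per_week: int) -> float:
    """Same idea when the instance is also stopped on some days of the week."""
    if not 0 <= hours_per_day <= HOURS_PER_DAY:
        raise ValueError("hours must be between 0 and 24")
    if not 0 <= days_per_week <= 7:
        raise ValueError("days must be between 0 and 7")
    return 1 - hours_per_day * days_per_week / HOURS_PER_WEEK
```

`daily_savings(8)` gives about 0.67, matching the roughly 66% above. An instance used from 8 am to 6 pm on weekdays only, `weekly_savings(10, 5)`, saves about 70%.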

As mentioned above, with Auto Scaling you can scale groups of servers that have to be online 24/7 down to one instance at night, when user activity is low.
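For Auto Scaling groups this does not even need custom logic: scheduled actions can change the group's capacity at fixed times. A boto3 sketch under assumptions: the group name, the 20:00/07:00 times, the time zone and the capacity values are hypothetical; Recurrence uses the Unix cron format.

```python
def night_schedule(
    group_name: str,
    max_size: int = 10,
    day_min: int = 2,
    day_desired: int = 2,
    night_cron: str = "0 20 * * *",
    morning_cron: str = "0 7 * * *",
    time_zone: str = "Etc/UTC",
) -> list[dict]:
    """Keyword arguments for two put_scheduled_update_group_action calls:
    shrink to one instance in the evening, restore the day capacity in the morning."""
    if not 1 <= day_min <= day_desired <= max_size:
        raise ValueError("expected 1 <= day_min <= day_desired <= max_size")
    # MaxSize stays unchanged, so the group can still scale out at night if load spikes.
    common = {"AutoScalingGroupName": group_name, "MaxSize": max_size, "TimeZone": time_zone}
    return [
        {**common, "ScheduledActionName": f"{group_name}-night",
         "Recurrence": night_cron, "MinSize": 1, "DesiredCapacity": 1},
        {**common, "ScheduledActionName": f"{group_name}-morning",
         "Recurrence": morning_cron, "MinSize": day_min, "DesiredCapacity": day_desired},
    ]


def apply_schedule(group_name: str) -> None:
    import boto3  # imported lazily so night_schedule can be used offline

    autoscaling = boto3.client("autoscaling")
    for action in night_schedule(group_name):
        autoscaling.put_scheduled_update_group_action(**action)
```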

Other instances you might shut down completely if there is no requirement to run them 24/7.

The instances are only used from 8 am to 6 pm? Shut them down for the rest of the time.

You can automate this with tags and Lambda functions, with Systems Manager or with other approaches.

Please have a look at this blog and at my GitHub account to find a Lambda function that automates the instance scheduling for you:

Schedule/Stop your instances by Lambda
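Independent of that function, here is a minimal sketch of the tag-based idea in Python, meant to run as a Lambda handler triggered by a scheduled EventBridge rule such as cron(0 18 ? * MON-FRI *) (EventBridge rule schedules are evaluated in UTC). The tag key Schedule with value office-hours is a hypothetical convention, and the function's role needs the ec2:DescribeInstances and ec2:StopInstances permissions. A second, morning rule would call start_instances in the same way.

```python
TAG_KEY = "Schedule"        # hypothetical tag convention
TAG_VALUE = "office-hours"  # instances tagged like this are stopped in the evening


def instance_ids(pages) -> list[str]:
    """Collect instance IDs from describe_instances response pages."""
    return [
        instance["InstanceId"]
        for page in pages
        for reservation in page.get("Reservations", [])
        for instance in reservation.get("Instances", [])
    ]


def lambda_handler(event, context):
    import boto3  # imported in the handler so instance_ids can be tested without boto3

    ec2 = boto3.client("ec2")
    pages = ec2.get_paginator("describe_instances").paginate(
        Filters=[
            {"Name": f"tag:{TAG_KEY}", "Values": [TAG_VALUE]},
            {"Name": "instance-state-name", "Values": ["running"]},
        ]
    )
    ids = instance_ids(pages)
    if ids:
        ec2.stop_instances(InstanceIds=ids)
    return {"stopped": ids}
```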

Also terminate any unused instances. Sometimes people launch an instance to test something but forget to stop or terminate it. Stop or terminate anything that is not really needed.

Don't use 24/7 instances for background tasks.

Use Docker and, for example, AWS Batch. Fargate can also serve if you configure the task to stop after processing. If the job needs 2 hours to process, those 2 hours are all you should pay for.

Serverless is also a good approach for non-steady workloads.

You pay only for the processing time, not for a 24/7 instance.

This can be perfect for business logic (moved from applications into Lambda) or for databases like Aurora Serverless or DynamoDB.
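To compare both models for your own workload, here is a Python sketch of the two cost formulas. The prices are parameters on purpose: take the current Lambda and EC2 prices for your region and architecture from the AWS pricing pages. The workload numbers in the usage note are hypothetical.

```python
HOURS_PER_MONTH = 730  # the monthly hour count used by the AWS Pricing Calculator


def lambda_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Compute charge (GB-seconds) plus request charge; the free tier is ignored."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return gb_seconds * price_per_gb_second + invocations / 1_000_000 * price_per_million_requests


def always_on_monthly_cost(hourly_price: float, instances: int = 1) -> float:
    """Instances billed for every hour of the month, busy or not."""
    return hourly_price * HOURS_PER_MONTH * instances
```

For example, one million invocations a month at 200 ms and 512 MB amount to 100,000 GB-seconds; with assumed prices of $0.0000166667 per GB-second and $0.20 per million requests, `lambda_monthly_cost(1_000_000, 200, 512, 0.0000166667, 0.20)` comes to about $1.87, while a single instance at an assumed $0.04 per hour costs about $29 per month.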

Use Spot Instances, Reserved Instances and Savings Plans

Spot Instances use spare EC2 capacity and can cost up to 90% less than On-Demand Instances. The Spot price is set by AWS and adjusts gradually based on long-term supply and demand; you can optionally set a maximum price you are willing to pay. But don't use them for important workloads that must not be interrupted: AWS can reclaim a Spot Instance at any time with a two-minute warning. They are not suitable for production databases!

Great use cases are stateless servers (added by Auto Scaling on top of a baseline of Reserved or On-Demand servers), servers for batch processes and anything else that will not cause you trouble if a server goes down. Also consider them for your Dev and QA environments, but not for production.
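An Auto Scaling group can combine both purchase options: a fixed number of On-Demand instances as the baseline and Spot Instances for everything above it. A minimal boto3 sketch, assuming an existing launch template; the template name, instance types, subnet IDs and counts are hypothetical.

```python
def mixed_instances_policy(
    launch_template_name: str,
    instance_types: list[str],
    on_demand_base: int = 2,
    on_demand_percent_above_base: int = 0,
) -> dict:
    """MixedInstancesPolicy: On-Demand baseline, Spot for the capacity above it."""
    if not instance_types:
        raise ValueError("list at least one instance type")
    if not 0 <= on_demand_percent_above_base <= 100:
        raise ValueError("percentage must be between 0 and 100")
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": launch_template_name,
                "Version": "$Latest",
            },
            # Several instance types give Auto Scaling more Spot capacity pools.
            "Overrides": [{"InstanceType": t} for t in instance_types],
        },
        "InstancesDistribution": {
            "OnDemandBaseCapacity": on_demand_base,
            "OnDemandPercentageAboveBaseCapacity": on_demand_percent_above_base,
            "SpotAllocationStrategy": "price-capacity-optimized",
        },
    }


def create_group(group_name: str, subnet_ids: list[str], policy: dict,
                 min_size: int = 2, max_size: int = 10) -> None:
    import boto3  # imported lazily so the policy builder can be used offline

    boto3.client("autoscaling").create_auto_scaling_group(
        AutoScalingGroupName=group_name,
        MinSize=min_size,
        MaxSize=max_size,
        VPCZoneIdentifier=",".join(subnet_ids),
        MixedInstancesPolicy=policy,
    )
```

With OnDemandBaseCapacity=2 and OnDemandPercentageAboveBaseCapacity=0, the first two instances are On-Demand and every additional instance is Spot, so an interruption only ever removes capacity above the baseline.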

With Reserved Instances you make a long-term commitment of one or three years in return for a significant discount, so you can save significant money using them. Reserved Instances are available for EC2 and also for RDS instances.

AWS Savings Plans are a pricing model that offers discounted prices compared to On-Demand when you commit to a consistent amount of usage, measured in $/hour, for one or three years.

For serverless compute like Fargate or Lambda you can purchase a Compute Savings Plan, which also covers EC2.
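Whether a commitment pays off depends on how many hours the resource actually runs. A Reserved Instance or Savings Plan is billed for every hour of the term, so with a discount d and a usage share u (the fraction of hours the instance would otherwise run On-Demand), the commitment is cheaper only if 1 - d < u. A small Python sketch of this break-even check; the discount values are examples, since real discounts depend on term, payment option and instance family.

```python
def breakeven_usage(discount: float) -> float:
    """Share of hours above which a commitment with this discount beats On-Demand.

    Committed cost per hour: (1 - discount) * price, paid for every hour.
    On-Demand cost per hour on average: usage_share * price.
    """
    if not 0 < discount < 1:
        raise ValueError("discount must be between 0 and 1")
    return 1 - discount


def commitment_pays_off(discount: float, usage_share: float) -> bool:
    return usage_share > breakeven_usage(discount)
```

With an assumed 40% discount, the break-even is 60% of the hours: an instance running 24/7 (usage share 1.0) clearly benefits, while one that only runs 8 hours a day (usage share 1/3) stays cheaper On-Demand.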

Storage

S3 usage and storage type

For S3 there is not only the Standard storage class available, which is the most used because it is the default. The S3 Standard-Infrequent Access (IA) and S3 One Zone-IA classes are designed for data that is accessed less than once a month.

These classes have a lower storage price than S3 Standard, but you pay a per-GB retrieval fee, a minimum storage duration of 30 days applies, objects smaller than 128 KB are billed as 128 KB, and One Zone-IA keeps your data in a single Availability Zone only.

For data you access frequently, S3 Standard remains the better choice: its storage price is higher, but there are no retrieval fees.

The S3 Glacier storage classes are also a great and cheap option for long-term archive storage.

Please have a look at our blog for more information on:

S3 Storage classes and intelligent tiering

Also keep in mind that versioning is not free: every stored object version adds to your bucket size and therefore to your costs.

If you store a lot of data in the bucket and don't really need versioning, don't activate this feature.
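Both points, moving colder data to cheaper storage classes and limiting how long old versions are kept, can be automated with an S3 lifecycle configuration. A minimal boto3 sketch; the bucket name, the logs/ prefix and the 30/90/30-day values are hypothetical examples. GLACIER is the API name of S3 Glacier Flexible Retrieval.

```python
def lifecycle_configuration(
    prefix: str = "logs/",
    ia_after_days: int = 30,
    glacier_after_days: int = 90,
    noncurrent_expire_days: int = 30,
) -> dict:
    """One rule: Standard -> Standard-IA -> Glacier Flexible Retrieval,
    and delete noncurrent object versions after a while."""
    if ia_after_days < 30:
        raise ValueError("objects must be at least 30 days old before moving to STANDARD_IA")
    if glacier_after_days <= ia_after_days:
        raise ValueError("the Glacier transition must come after the IA transition")
    return {
        "Rules": [
            {
                "ID": "tier-and-expire-old-versions",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
                # Only has an effect on versioned buckets.
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_expire_days},
            }
        ]
    }


def apply_lifecycle(bucket: str) -> None:
    import boto3  # imported lazily so the builder can be used offline

    # Caution: this call replaces the bucket's entire existing lifecycle configuration.
    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=lifecycle_configuration()
    )
```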

EBS Volumes

With EBS volumes you can also save a lot of money. First, delete unattached EBS volumes.

Also delete old snapshots that are no longer needed.

When you stop an EC2 instance, its EBS volumes remain and keep causing costs. When you terminate an instance, volumes whose DeleteOnTermination attribute is false (the default for non-root volumes) are left behind as unattached volumes, still causing costs.

You should find the unattached EBS volumes and identify whether they are still needed.
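Finding candidates can be scripted. A minimal boto3 sketch that only reports and deletes nothing: unattached volumes are those in state available, and old snapshots are filtered by their StartTime. The 90-day age limit is an arbitrary example, and snapshots still used by an AMI or required by a backup policy must be kept, so review the lists before deleting anything.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def unattached_volumes(volumes: list[dict]) -> list[tuple[str, int]]:
    """(VolumeId, size in GiB) of volumes in state 'available', i.e. attached to nothing."""
    return [(v["VolumeId"], v["Size"]) for v in volumes if v["State"] == "available"]


def old_snapshots(snapshots: list[dict], max_age_days: int = 90,
                  now: Optional[datetime] = None) -> list[str]:
    """IDs of snapshots older than max_age_days (StartTime must be timezone-aware)."""
    cutoff = (now or datetime.now(timezone.utc)) - timedelta(days=max_age_days)
    return [s["SnapshotId"] for s in snapshots if s["StartTime"] < cutoff]


def fetch_inventory() -> tuple[list[dict], list[dict]]:
    """All volumes and all snapshots owned by this account in the client's region."""
    import boto3  # imported lazily so the filters above work offline

    ec2 = boto3.client("ec2")
    volumes = [v for page in ec2.get_paginator("describe_volumes").paginate()
               for v in page["Volumes"]]
    snapshots = [s for page in ec2.get_paginator("describe_snapshots").paginate(OwnerIds=["self"])
                 for s in page["Snapshots"]]
    return volumes, snapshots
```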

Rightsize your volumes; this is important not just for EC2 instances but also for RDS.

If you really run out of disk space, you can still increase the volume size later (EBS volumes and RDS storage can be enlarged, but not shrunk).

Select the right EBS volume types.

EBS volumes are divided into two main categories:

- HDD-backed storage for throughput-intensive workloads.
- SSD-backed storage for transactional workloads.

Which one to use depends a lot on your use case and application type.

For example, if you are working on applications that read or write huge volumes of sequential data, you can consider HDD storage.

Both Cold HDD (sc1) and Throughput Optimized HDD (st1) volumes are considerably cheaper per GB than SSD storage.

You can find more details in the AWS EBS documentation.

Serverless

DynamoDB

DynamoDB can become extremely expensive to use.

There are two capacity modes to choose from: provisioned capacity and on-demand capacity.

You should use provisioned capacity when:

- You know the maximum workload your application will have

- Your application’s traffic is consistent and does not require much scaling (auto scaling is also available for provisioned capacity; it has no charge of its own apart from the CloudWatch alarms it creates)

You run better with on-demand capacity when:

- You’re not sure about the workload of your application

- You don’t know how consistent your application’s data traffic will be

- The data might be accessed only sporadically

- You only want to pay for what you use

Based on this, make your choice with the following in mind:

On-demand tables are several times more costly per request than fully utilized provisioned tables.

So if provisioned capacity is possible because your workload is fairly constant, it is the better choice.
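This trade-off can be expressed as a break-even utilization. One write capacity unit (WCU) handles one standard write of up to 1 KB per second and costs the same per hour whether it is used or not, while on-demand bills each write. The Python sketch below computes the average utilization above which provisioned capacity is cheaper; the prices in the usage note are illustrative, so take the current ones for your region from the DynamoDB pricing page. Reads work the same way with read capacity units.

```python
SECONDS_PER_HOUR = 3600


def provisioned_cost_per_million_writes(price_per_wcu_hour: float, utilization: float) -> float:
    """Effective price of one million writes on provisioned capacity.

    One WCU sustains one standard write per second; unused capacity is still paid for.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    writes_per_wcu_hour = SECONDS_PER_HOUR * utilization
    return price_per_wcu_hour / writes_per_wcu_hour * 1_000_000


def breakeven_utilization(price_per_wcu_hour: float, on_demand_price_per_million_writes: float) -> float:
    """Average utilization above which provisioned capacity beats on-demand."""
    return price_per_wcu_hour * 1_000_000 / (SECONDS_PER_HOUR * on_demand_price_per_million_writes)
```

With an assumed $0.00065 per WCU-hour and $0.625 per million on-demand writes, `breakeven_utilization(0.00065, 0.625)` is about 0.29: if your provisioned capacity is used more than roughly 29% on average, provisioned mode would be cheaper.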

Network

VPC Setup

Please also have a look at this blog:

VPC Considerations in 2024

It is best practice not to use the default VPC in a region but to set up your own VPC instead, together with your other infrastructure resources, using IaC.

You should also take costs into consideration in your VPC design.

For instances in private subnets to be able to connect to the internet (which is normally needed, for updates etc.), you need a NAT Gateway.

A NAT Gateway (NGW) is placed in one Availability Zone.

So if you set up a VPC across 3 AZs and want a real Multi-AZ setup, you will need 3 NGWs.

Otherwise, if the AZ with the only NGW fails, the instances in the 2 other AZs might still run but cause trouble and errors, because they can no longer access the internet.

A NAT Gateway will cost you about $35 a month, plus a per-GB charge for the data it processes.

So if you have 3 NGWs in each of 3 stages, the NGWs alone cost about $315 a month.

If you consider an AZ failure unlikely and accept the risk, you can of course stick with one NGW.

Depending on this decision, you might change your VPC design.

Do you want a VPC across 3 Availability Zones (3 public subnets & 3 private subnets) or only 2 Availability Zones (2 public subnets & 2 private subnets)?

The second option can already handle the failure of one AZ, so it is Multi-AZ as well.

If you want the highest possible availability, go for 3.

But keep in mind: if you choose one NGW per AZ, the NAT Gateway costs of the 3-AZ VPC are 50% higher than those of the 2-AZ VPC.
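The NAT Gateway arithmetic from this section as a tiny Python sketch. The $35 per month is the rough figure from the text; the per-GB data processing part is optional and its price is a parameter you would take from the VPC pricing page.

```python
def nat_gateway_monthly_cost(
    ngws_per_stage: int,
    stages: int,
    monthly_cost_per_ngw: float = 35.0,
    gb_processed: float = 0.0,
    price_per_gb: float = 0.0,
) -> float:
    """Hourly NAT Gateway charges (as a monthly figure) plus optional data processing."""
    fixed = ngws_per_stage * stages * monthly_cost_per_ngw
    return fixed + gb_processed * price_per_gb


three_az = nat_gateway_monthly_cost(ngws_per_stage=3, stages=3)  # 315.0
two_az = nat_gateway_monthly_cost(ngws_per_stage=2, stages=3)    # 210.0
extra_share = three_az / two_az - 1                              # 0.5, i.e. 50% more
```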

Use private IPs and endpoints

Traffic inside your VPC over private IPs is normally free, but traffic over public addresses is charged.

Note: Data transfer between Availability Zones does cost extra; see the next section.

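S3 buckets, for example, are accessed via public endpoints by default. Even if you access their content from within your VPC, the traffic leaves the VPC, and from private subnets it passes through the NAT Gateway, which charges for every GB it processes.

But you can create a gateway VPC endpoint for S3, and this way your AWS resources can access the data without extra costs. Gateway endpoints for S3 are free, whereas interface endpoints are charged per hour and per GB.

Not many people use this feature.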
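A minimal boto3 sketch of creating such a gateway endpoint; the VPC ID, route table IDs and region are placeholders you would fill in, and in an IaC setup you would declare the same resource in your templates instead.

```python
def s3_gateway_endpoint_request(vpc_id: str, route_table_ids: list[str], region: str) -> dict:
    """Keyword arguments for ec2.create_vpc_endpoint (Gateway type, S3 service)."""
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        # S3 routes are added to these route tables, typically those of the private subnets.
        "RouteTableIds": list(route_table_ids),
    }


def create_s3_gateway_endpoint(vpc_id: str, route_table_ids: list[str], region: str) -> dict:
    import boto3  # imported lazily so the request builder can be used offline

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.create_vpc_endpoint(**s3_gateway_endpoint_request(vpc_id, route_table_ids, region))
```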

Also, EC2 instances should always be placed in private subnets (unless they are internet-facing web servers).

First, this is a must for security, but it also affects network traffic costs.

If you access your instances over a public IP address, you will be charged.

Data transfer via private IP addresses within the same Availability Zone is not charged.


It is always recommended to use only private IPs and subnets for communication between your apps, services and databases.

Also have a look at the AWS documentation about VPC interface and gateway endpoints; they might also help you save costs.

Choose Availability Zones and regions with costs in mind

Compliance might oblige you to run your services and store your data in your own region.

If not, you should take a look at the prices for AWS services in different regions and compare them.

They differ from region to region, and it might be an option to store your data or run your services in a nearby region rather than in your home region.

Keep latency in mind, although it matters less if you use, for example, CloudFront as a cache: the data cached in CloudFront is served from edge locations all over the world.

Data transfer within AWS is charged in 2 scenarios:

- Data transfer across regions.

For example, if you use S3 Cross-Region Replication or have RDS cross-region read replicas.

- Data transfer within a region.

Data transfer from one Availability Zone to another within the same region will cost you money.

Data transfer between two Amazon EC2 instances in different Availability Zones within the same region is charged at $0.01 per GB in each direction.

Data transfer between an Amazon EC2 instance and an Amazon S3 bucket in the same region, on the other hand, is free; charges of around $0.09 per GB apply when data leaves AWS to the internet.

Keep this in mind when you design your system architecture.
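To see what cross-AZ traffic means for a concrete architecture, here is a Python sketch, assuming the $0.01 per GB mentioned above is charged in each direction (AWS bills both the sending and the receiving side), so every GB crossing an AZ boundary effectively costs $0.02. The traffic volume in the example is hypothetical.

```python
def cross_az_monthly_cost(gb_per_month: float, price_per_gb_each_direction: float = 0.01) -> float:
    """Cost of traffic crossing an AZ boundary: billed on the way out and on the way in."""
    return gb_per_month * price_per_gb_each_direction * 2


# Hypothetical example: an app tier sends 5 TB per month to a database in another AZ.
monthly = cross_az_monthly_cost(5_000)
```

Here that is about $100 a month; whether this matters depends on your total bill, which is why the initial cost analysis comes first.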

Multi-AZ is important for high availability, but it is not free.

Try to reduce communication and data transfer between Availability Zones.

For your RDS databases this might not be the best option, but there are use cases where you should apply it, for example batch jobs triggered nightly.

Does the batch environment really need to be Multi-AZ and highly available?

What happens if you use only one AZ and it goes offline for 2 hours?

Your batch jobs simply run a little later. If you can accept this, go for the cost-saving approach.

If you define a placement group for a High Performance Computing use case, it is recommended anyway to use a 'Cluster Placement Group' rather than a 'Spread Placement Group': a cluster placement group runs your instances in a single AZ, which keeps latency low and achieves maximum performance.
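Creating a cluster placement group and launching the nodes into it takes two API calls. A boto3 sketch under assumptions: the group name, AMI ID, instance type, node count and subnet ID are hypothetical, and the subnet determines the single AZ the cluster runs in. AWS recommends launching all instances of a cluster placement group in a single request with the same instance type, which the sketch follows.

```python
def cluster_placement_group_request(group_name: str) -> dict:
    """Keyword arguments for ec2.create_placement_group."""
    return {"GroupName": group_name, "Strategy": "cluster"}


def hpc_launch_request(image_id: str, instance_type: str, count: int,
                       group_name: str, subnet_id: str) -> dict:
    """Keyword arguments for ec2.run_instances: all nodes in one request,
    same instance type, placed in the cluster placement group."""
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "MinCount": count,  # MinCount == MaxCount: launch all nodes or none
        "MaxCount": count,
        "SubnetId": subnet_id,
        "Placement": {"GroupName": group_name},
    }


def launch_hpc_nodes(image_id: str, instance_type: str, count: int,
                     subnet_id: str, group_name: str = "hpc-cluster") -> dict:
    import boto3  # imported lazily so the builders can be used offline

    ec2 = boto3.client("ec2")
    ec2.create_placement_group(**cluster_placement_group_request(group_name))
    return ec2.run_instances(**hpc_launch_request(image_id, instance_type, count, group_name, subnet_id))
```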

If you want to run your application servers in only one AZ, be aware that this setup cannot be considered highly available. But you could implement a standby AZ (or standby region) with automatic failover.

The right decision here of course depends a lot on your actual costs.

That is why the initial cost analysis is important.

If data transfer costs are 1% of your AWS bill, there is no need to worry.

If they are much higher and amount to significant money, take them into account in your Multi-AZ architecture as well.

Once you have optimized everything, don't rest: analyze your costs again every month and verify that you still follow all these best practices.

Monthly Analysis

Analyse monthly with Trusted Advisor and Cost Explorer

Once you have optimized your costs, don't forget to analyse them again after a while, ideally every month.

Maybe your migration is still ongoing, or new services and features are being developed and deployed, so check your infrastructure regularly.
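Part of this monthly review can be automated by comparing two months per service and only looking at what changed significantly. A Python sketch, assuming you already have the costs per service of both months as dictionaries, for example from two Cost Explorer queries grouped by SERVICE; the 20% and $10 thresholds are arbitrary examples.

```python
def cost_increases(
    previous: dict[str, float],
    current: dict[str, float],
    min_increase_percent: float = 20.0,
    min_amount: float = 10.0,
) -> list[tuple[str, float, float]]:
    """Services whose monthly cost rose by more than min_increase_percent,
    skipping small amounts; largest absolute increase first."""
    flagged = []
    for service, now in current.items():
        before = previous.get(service, 0.0)
        if now < min_amount:
            continue  # e.g. $4 of Lambda is not worth a closer look
        if before == 0 or (now - before) / before * 100 > min_increase_percent:
            flagged.append((service, before, now))
    return sorted(flagged, key=lambda item: item[2] - item[1], reverse=True)
```

New services (no cost in the previous month) are always flagged once they pass the minimum amount, which catches forgotten test resources early.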

Set up Cost Alarms

One great way to keep an eye on your costs on AWS is to set up cost alerts, which send a notification when your monthly bill reaches a certain amount. To do this, navigate to the Billing and Cost Management section of the AWS Management Console.

Then open the Billing preferences, enable the "Receive CloudWatch billing alerts" option and create a CloudWatch alarm on the EstimatedCharges billing metric, which is only available in the US East (N. Virginia) region.
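Once billing alerts are enabled, the alarm itself can also be created in code. A boto3 sketch; the threshold, alarm name and SNS topic ARN are hypothetical. EstimatedCharges is the month-to-date estimate, so the alarm fires once the current month's charges exceed the threshold.

```python
def billing_alarm_request(threshold_usd: float, sns_topic_arn: str,
                          alarm_name: str = "monthly-bill-threshold") -> dict:
    """Keyword arguments for cloudwatch.put_metric_alarm on the EstimatedCharges metric."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/Billing",
        "MetricName": "EstimatedCharges",
        "Dimensions": [{"Name": "Currency", "Value": "USD"}],
        "Statistic": "Maximum",
        "Period": 21600,  # 6 hours; the metric is only updated a few times per day
        "EvaluationPeriods": 1,
        "Threshold": float(threshold_usd),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # e.g. an SNS topic with an e-mail subscription
    }


def create_billing_alarm(threshold_usd: float, sns_topic_arn: str) -> None:
    import boto3  # imported lazily so the request builder can be used offline

    # Billing metrics are published in US East (N. Virginia) only.
    cloudwatch = boto3.client("cloudwatch", region_name="us-east-1")
    cloudwatch.put_metric_alarm(**billing_alarm_request(threshold_usd, sns_topic_arn))
```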

Conclusion

I hope these tips help you save money.

If you have questions or need help implementing any of this, don't hesitate to contact us.


Author

Wolfgang Unger

AWS Architect & Developer

6 x AWS Certified

1 x Azure Certified

A Cloud Guru Instructor

Certified Oracle JEE Architect

Certified Scrum Master

Certified Java Programmer

Passionate surfer & guitar player